Improving Vietnamese Fake News Detection based on Contextual Language Model and Handcrafted Features

University of Information Technology, Ho Chi Minh City, Vietnam; Vietnam National University, Ho Chi Minh City, Vietnam

Abstract

Introduction: In recent years, the rise of social networks in Vietnam has resulted in an abundance of information. However, it has also made it easier for people to spread fake news, which has done a great disservice to society. It is therefore crucial to verify the reliability of news. This paper presents a hybrid approach that uses a pretrained language model called vELECTRA along with handcrafted features to identify reliable information on Vietnamese social network sites.

Methods: The present study employed two primary approaches, namely: 1) fine-tuning the model by utilizing solely textual data, and 2) combining additional meta-data with the text to create an input representation for the model.

Results: Our approach performs slightly better than other fine-tuned BERT methods and achieves state-of-the-art results on the ReINTEL dataset published by VLSP in 2020. Using transfer learning with meta features to detect fake news in Vietnamese, our method achieved an AUC score of 0.9575.

Conclusion: Based on the results and analysis, we infer that the number of reactions a post receives and the time at which it was posted are indicative of the news' credibility. Furthermore, we found that BERT-based models can encode numerical values that have been converted into text.

INTRODUCTION

In today’s world, the internet is rife with an overwhelming amount of information, and social networking sites such as Facebook and Twitter are experiencing a significant surge in popularity and becoming more accessible to the general public. However, some individuals exploit these platforms by spreading unreliable information for their personal gain, such as earning more revenue from website clicks or influencing the community’s political agenda 1.

Because fake news is sensational, its provocative intent is often hard to detect, and if social media users do not read it carefully, it can spread rapidly and have dire consequences for its victims, such as tarnished reputations. Major global events are closely associated with fake news, and since the outbreak of the COVID-19 pandemic in 2020, pandemic-related fake news has been rampant. People living in countries with travel restrictions amid COVID-19 tend to believe rumors circulating within their communities without checking their validity. Out of fear of the outbreak, readers tend to trust unconfirmed information about pandemic prevention on social media, which can be reckless2. Such misinformation can cause serious misunderstandings about major global events. Determining the reliability of news has therefore attracted considerable attention as a way to stem the spread of false information.

In this study, we focus on classifying the reliability of news written in Vietnamese, a low-resource language. We aim to predict whether a news item is reliable or unreliable by applying Transformer-based architectures and experimenting with several fine-tuning strategies to improve model performance.

RELATED WORKS

Fake news detection can be framed as a text classification task in which each input news article is labeled either fake or real. Various machine learning and deep learning approaches have been applied to it. Agarwal (2021)3 proposed adding a Bi-LSTM layer with attention on top of contextual sentence embeddings to classify the trustworthiness of English news. Monti et al. (2019) studied the effectiveness of graph neural networks 4. Their architecture combined representations of user activity and article information and fed them into a four-layer graph CNN to predict whether news is fake or real.

Qi et al. (2019) emphasized the vital role of visual content and proposed a deep learning approach, the multi-domain visual neural network, to distinguish real from fake news based on a single image5. They designed a CNN-based network to capture patterns of fake news images in the frequency domain and a joint CNN-RNN model to extract image features at different semantic levels in the pixel domain. An attention mechanism fused the frequency-domain and pixel-domain representations to detect fake news in Chinese.

For Vietnamese, several works were submitted to a VLSP shared task, including Thanh and Van (2020), Trinh et al. (2021), Tran et al. (2020) 6, 7, 8 and Nguyen et al. (2020) 9. They leveraged the strength of Transformer-based models and fine-tuned them on linguistic features. In addition, Hieu (2020) 10 used PhoBERT embeddings and TF-IDF to encode document content into vector representations, which were fused with meta-data vectors. These representations were fed into tree-based methods, namely Random Forest, CatBoost and LightGBM, to predict label probabilities.

METHODOLOGY

In this section, we present our approach to this problem. We chose state-of-the-art (SOTA) techniques, namely BERT and the variants described below. We also propose a new approach that describes the meta-data provided in the dataset in words to enrich the text information. We observed that numeric features can contribute substantial insight to the model and that BERT can encode numbers reasonably well in medium ranges. We therefore describe the feature values as text and combine this description with the original text. Finally, we feed the combined text into the model and apply advanced fine-tuning techniques.

Data processing

Fake news detection can draw not only on the content of the news but also on related information. On the ReINTEL dataset, we applied the following preprocessing steps to clean the data:

- Extract timestamp features: We split each timestamp into day, month, year, hour and weekday to enrich the dataset's features. Our data analysis indicated that these features help the models predict more accurately.

- Remove absurd data points: The data collection process may introduce outliers. As shown in Table 1, the maximum values exceed one billion, which is implausible.

- Fill missing data: After removing implausible data points, we were left with a significant number of missing values, as shown in Table 2. We filled them with different strategies: the mean and the median value for the three interaction features, namely the number of likes, the number of comments and the number of shares. For posts with a missing DateTime value, we copied the DateTime feature values of the post whose content is most similar.
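The three meta-data steps above can be sketched in plain Python as follows. This is a minimal illustration, not the authors' code: the field names (`timestamp_post`, `num_like_post`, `num_comment_post`, `num_share_post`), the reading of timestamps as Unix seconds in Vietnam time (UTC+7) and the one-billion plausibility cut-off are all assumptions. Implausible counts are treated as missing before imputation, and the copy-DateTime-from-most-similar-post step is omitted.

```python
from datetime import datetime, timedelta, timezone
from statistics import mean, median

# Assumed (hypothetical) field names for the three interaction counts.
COUNT_FIELDS = ("num_like_post", "num_comment_post", "num_share_post")
# Assumed cut-off: Table 1 shows maxima above one billion, which we treat as corrupt.
MAX_PLAUSIBLE = 1_000_000_000
# Assumption: timestamps are Unix seconds, interpreted in Vietnam time (UTC+7).
VN_TZ = timezone(timedelta(hours=7))


def timestamp_features(ts):
    """Split a Unix timestamp into day, month, year, hour and weekday (Monday = 0)."""
    dt = datetime.fromtimestamp(ts, tz=VN_TZ)
    return {"day": dt.day, "month": dt.month, "year": dt.year,
            "hour": dt.hour, "weekday": dt.weekday()}


def clean_counts(posts, strategy="mean"):
    """Treat implausible counts as missing, then impute each field with its mean or median.

    `posts` is a list of dicts; it is modified in place and also returned.
    """
    fill = mean if strategy == "mean" else median
    for field in COUNT_FIELDS:
        for post in posts:
            value = post.get(field)
            if value is not None and value > MAX_PLAUSIBLE:
                post[field] = None  # outlier -> missing
        observed = [post[field] for post in posts if post.get(field) is not None]
        replacement = fill(observed)  # raises StatisticsError if nothing is observed
        for post in posts:
            if post.get(field) is None:
                post[field] = replacement
    return posts
```

Passing `strategy="median"` gives the median-filling variant that the experiments compare against mean filling.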

The post message requires more careful preprocessing than the other features because it is the most crucial input for the model to understand the news content; the more thoroughly the text is cleaned, the more meaningful patterns the models can extract, which in turn improves their performance. We therefore applied the following preprocessing steps to the text before feeding it into the model:

- HTML tags, redundant characters and stop words were removed.

- Synonyms referring to the same entity were converted into one consistent term. For instance, “Hoa Kỳ” was changed to “Mỹ” (both meaning “USA” in Vietnamese), and “COVID-19” and “coronavirus” were changed to “COVID”.

- PhoBERT 11 requires word-segmented input, so we used VnCoreNLP12 to segment the text.

- Emojis and emoticons were converted into text describing them. For example, “☹((” was converted into the token “negative”.
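A minimal sketch of the text-cleaning steps follows. The synonym and emoticon tables are hypothetical, abbreviated stand-ins built only from the examples above; stop-word removal and VnCoreNLP word segmentation are left out because they depend on external resources.

```python
import re

# Hypothetical, abbreviated lookup tables based on the examples in the text.
SYNONYMS = {"Hoa Kỳ": "Mỹ", "COVID-19": "COVID", "coronavirus": "COVID"}
EMOTICONS = {"☹((": "negative"}
HTML_TAG = re.compile(r"<[^>]+>")
WHITESPACE = re.compile(r"\s+")


def normalize_post(text):
    text = HTML_TAG.sub(" ", text)  # drop HTML tags
    for emoticon, word in EMOTICONS.items():  # emoticon -> descriptive token
        text = text.replace(emoticon, f" {word} ")
    for variant, canonical in SYNONYMS.items():  # unify synonymous terms
        text = re.sub(re.escape(variant), canonical, text, flags=re.IGNORECASE)
    return WHITESPACE.sub(" ", text).strip()  # collapse redundant whitespace
```

"COVID-19" is listed before any shorter pattern that could match inside it, so the replacements do not interfere with each other.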

Meta-data description in the experimental dataset.

| Statistic | Num like post | Num comment post | Num share post |
|---|---|---|---|
| Count | 4372 | 4372 | 4372 |
| Mean | 1456400000000 | 1088786 | 362830.6 |
| Std | 48063100 | 41548540 | 23951710 |
| Min | 1 | 0 | 0 |
| 25% | 9 | 0 | 2 |
| 50% | 38 | 43 | 22 |
| 75% | 432 | 33 | 87 |
| Max | 1592811000 | 1591690000 | 1583711400 |

Statistics of missing values in the dataset

| Feature | Train set | Test set |
|---|---|---|
| Id | 0 | 0 |
| user_name | 0 | 0 |
| post_message | 1 | 0 |
| Num_like_post | 115 | 616 |
| Num_comment_post | 10 | 677 |
| Num_share_post | 725 | 742 |
| image | 3085 | 1138 |

Data Analysis

The content of the news is crucial for determining its veracity. We therefore visualized the news content with word clouds to analyze and compare real and fake news. Figure 1 reveals keywords related to the COVID-19 pandemic and some distinctions between reliable and unreliable news. Specifically, we observed the following:

- Real news content tends to contain many URLs and hashtags, suggesting that it cites more reliable and accredited sources.

- Fake news content cites fewer credible sources but contains more keywords and negative icons. In addition, because fake news often serves a political purpose, the words ‘USA’ and ‘China’ appear frequently in its word cloud. These two countries are global superpowers with opposing interests, which makes them the subject of much baseless speculation.

Beyond the news content, Figure 2 plots the distribution of the number of reactions for fake and real news after these handcrafted features were preprocessed.

As noted in the Introduction, fake news often has highly sensational content, which attracts many people to comment on it and prompts them to share it because they are emotionally overwhelmed rather than analyzing the content. Likes behave differently: we expect readers to press the “Like” button only after reading a post carefully and agreeing with it. Qazvinian (2011) 5 and Jin et al. (2016) 13 likewise found that ordinary readers tend to express their biases and feelings toward fake news more than toward reliable news. Furthermore, unreliable news often appears between 6 PM and 10 PM, when most people are browsing the internet, whereas real news appears throughout the day. We therefore believe that these features, in addition to the text content, are important signals for predicting whether news is real.
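The evening-posting pattern can be checked with a simple per-label count. This sketch is illustrative only: it assumes an hour-of-day value per post (for example, from the timestamp features) and that label 1 marks unreliable news, and it takes the 6 PM to 10 PM window as hours 18 through 21.

```python
from collections import Counter


def evening_share(hours, labels, start=18, end=22):
    """Fraction of posts per label whose hour-of-day lies in [start, end)."""
    totals, evening = Counter(), Counter()
    for hour, label in zip(hours, labels):
        totals[label] += 1
        if start <= hour < end:
            evening[label] += 1
    return {label: evening[label] / totals[label] for label in totals}
```

A clearly higher fraction for the unreliable label than for the reliable one would be consistent with the observation above.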

Figure 1. (a) Fake news word cloud; (b) real news word cloud.

Figure 2. (a) Number of likes for real and fake news; (b) number of shares for real and fake news; (c) number of comments for real and fake news.

Pretrained Models

With the rapid development of deep learning, many pretrained language models are now available for Vietnamese, both multilingual and monolingual. Because this dataset consists of Vietnamese news and monolingual models have been found to outperform multilingual models on it8, we used three monolingual Vietnamese models for this task: ViBERT, vELECTRA14 and PhoBERT 11. They are briefly introduced below:

PhoBERT

vELECTRA

ViBERT

Fine-tuning approach

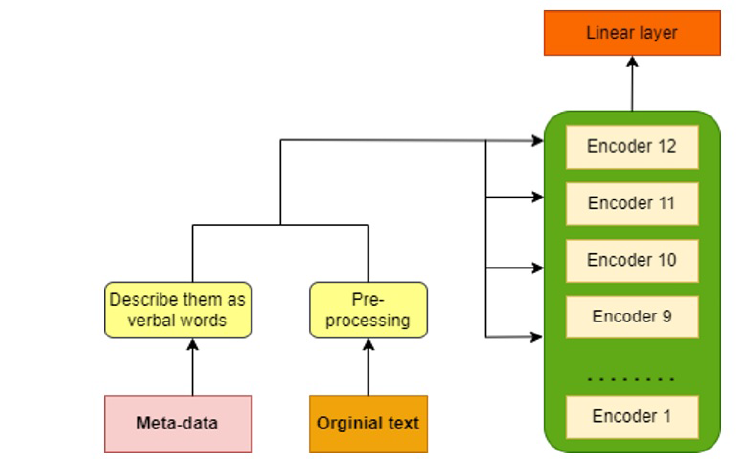

In this section, we present our approaches to fine-tuning the pretrained language models. Many teams in the 2020 VLSP competition used transfer learning, but most of them fine-tuned on textual features only 17 and ignored the other features, even though determining whether news is fake should rely not only on its content but also on other components. We therefore fine-tuned the models on text that incorporates the timestamp and reaction features expressed in words. For example, we wrote sentences such as “This post was written on Friday, April 3rd at 8 PM. There are 19447 likes, 32 comments and 50 shares from this post” (in Vietnamese) to describe these features, and then merged them with the original text to form new inputs. We illustrate this process in Figure 3.

Figure 3. Our proposed network architecture. The input, consisting of the original text together with its meta-data described in words, is encoded by the Transformer, and the last four hidden states are combined to produce the representation.
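The verbalization step can be sketched as a template function. The paper's actual template is in Vietnamese and not given in full, so the English template below, modeled on the example sentence above, is a hypothetical stand-in, as are the field names and the UTC+7 reading of Unix timestamps. Counts are rounded because mean imputation can produce non-integers.

```python
from datetime import datetime, timedelta, timezone

VN_TZ = timezone(timedelta(hours=7))  # assumption: Unix timestamps read in Vietnam time
WEEKDAYS = ("Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday", "Sunday")
MONTHS = ("January", "February", "March", "April", "May", "June", "July",
          "August", "September", "October", "November", "December")


def ordinal(n):
    """1 -> '1st', 2 -> '2nd', 11 -> '11th', 23 -> '23rd'."""
    if 11 <= n % 100 <= 13:
        suffix = "th"
    else:
        suffix = {1: "st", 2: "nd", 3: "rd"}.get(n % 10, "th")
    return f"{n}{suffix}"


def verbalize(post):
    """Append an English description of the meta-data to the post text (hypothetical template)."""
    dt = datetime.fromtimestamp(post["timestamp_post"], tz=VN_TZ)
    hour12 = dt.hour % 12 or 12
    period = "AM" if dt.hour < 12 else "PM"
    meta = (
        f"This post was written on {WEEKDAYS[dt.weekday()]}, {MONTHS[dt.month - 1]} "
        f"{ordinal(dt.day)} at {hour12} {period}. "
        f"There are {round(post['num_like_post'])} likes, "
        f"{round(post['num_comment_post'])} comments and "
        f"{round(post['num_share_post'])} shares from this post."
    )
    return f"{post['post_message']} {meta}"
```

Appending the description after the post, rather than prepending it, is our own choice here; under the head+tail truncation described later in this section, it keeps the meta-data inside the retained tail.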

Our implementation is based on the fine-tuning ideas proposed in 15 and 18. The BERT-based models we use consist of 12 Transformer encoder layers with a hidden size of 768. To fine-tune these architectures for classification, the special tokens [CLS] and [SEP] must be added to the text input: the output for the [CLS] token serves as the contextual representation of the whole input, and [SEP] separates sentences within the input string. The representations from one or several layers are then fed into a fully connected layer with a Softmax function that outputs the probability of each label. For the classification part, we applied two advanced fine-tuning techniques: learning-rate warm-up and gradual unfreezing of layers over the training epochs.
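The paper does not specify the warm-up schedule. One common choice, sketched below as an assumption, raises the learning rate linearly from 0 to the base rate over the warm-up steps and then decays it linearly to 0 (the same shape as the linear schedule with warm-up in Hugging Face Transformers). The step counts used with it are hypothetical.

```python
def lr_at(step, base_lr, warmup_steps, total_steps):
    """Learning rate at a given optimizer step: linear warm-up, then linear decay to 0."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)
```

For example, with the vELECTRA learning rate of 1e-05 from the results table and a hypothetical 100 warm-up steps out of 1,000, the rate reaches 1e-05 at step 100 and falls back to 5e-06 by step 550.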

Previous work has shown that the lower layers of BERT architectures capture surface features and general information, while higher layers capture progressively more granular and complex semantic features19, 20. Concatenating multiple layers therefore yields a representation that is both general and granular. We concatenated the last four layers because this choice was reported to give the best classification performance18.
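The classification head described above can be sketched with NumPy. The sketch assumes the encoder returns one hidden-state array per layer, as Hugging Face models do with `output_hidden_states=True` (the embedding output followed by one tensor per Transformer layer). It takes the [CLS] vector (position 0) from each of the last four layers, concatenates them into a 4 × 768 = 3072-dimensional feature, and applies a linear layer. We use a single logit with a sigmoid, which matches the BCEWithLogits loss used in training; for two classes this is equivalent to a softmax over the logits [0, z]. The weights are placeholders, not trained parameters.

```python
import numpy as np

HIDDEN = 768  # hidden size of the base BERT-style encoders, as stated above


def cls_from_last_four(hidden_states):
    """hidden_states: sequence of (batch, seq_len, HIDDEN) arrays, one per layer.

    Returns a (batch, 4 * HIDDEN) array: the [CLS] vectors of the last four layers, concatenated.
    """
    return np.concatenate([h[:, 0, :] for h in hidden_states[-4:]], axis=-1)


def classify(hidden_states, weight, bias):
    """Linear layer on the concatenated features -> one logit -> sigmoid probability."""
    features = cls_from_last_four(hidden_states)
    logits = features @ weight + bias  # weight: (4 * HIDDEN,), bias: scalar (placeholders)
    return 1.0 / (1.0 + np.exp(-logits))
```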

Another factor to consider is the length of the text input. BERT-based architectures accept at most 512 tokens, and computational constraints limited us to a maximum length of 256 tokens. We therefore applied the “head+tail” truncation method, which keeps the first 64 tokens and the last 192 tokens, because the key information usually appears at the beginning and the end of a text.
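Head+tail truncation reduces to two slices of the token sequence. A minimal sketch, assuming it is applied to the content tokens before the special tokens are added (the paper does not say how [CLS] and [SEP] are counted against the 256-token budget):

```python
def head_tail(tokens, head=64, tail=192):
    """Keep the first `head` and last `tail` tokens of an over-long sequence."""
    tokens = list(tokens)
    if len(tokens) <= head + tail:
        return tokens  # short enough: keep everything
    # Slice by explicit index so that tail=0 keeps no trailing tokens.
    return tokens[:head] + tokens[len(tokens) - tail:]
```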

We also intended to incorporate the image feature into the model alongside the other features, namely the text and the numbers of likes, comments and shares. However, the severe shortage of this information (

Training and testing procedures

During training, we fine-tune the model by taking the encoded [CLS] representations from the last four hidden layers, concatenating them, and feeding the result into a fully connected layer on top of BERT. We use the binary cross-entropy with logits loss (BCEWithLogits) to measure the difference between the model's predicted logits and the actual labels. For gradual unfreezing, we first freeze all pretrained layers and unfreeze them for fine-tuning after a few epochs. This ensures that the pretrained weights are not updated in the first epoch; because the lower layers have already learned general features, this helps avoid overfitting.
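Two pieces of the training procedure can be made concrete. The loss below is the standard numerically stable form of binary cross-entropy on a raw logit, mathematically equivalent to what PyTorch's `BCEWithLogitsLoss` computes per element (without weighting). The unfreezing schedule is a hypothetical parameterization, since the paper does not state how many epochs stay frozen: the encoder is frozen in epoch 0 while the head trains, and each later epoch unfreezes `layers_per_epoch` more layers from the top; setting it to 12 unfreezes everything after the first epoch.

```python
import math


def bce_with_logits(logit, target):
    """Stable binary cross-entropy on a raw logit; target is 0 or 1."""
    return max(logit, 0.0) - logit * target + math.log1p(math.exp(-abs(logit)))


def trainable_layers(epoch, num_layers=12, layers_per_epoch=4):
    """Encoder layer indices (0 = lowest) updated in a given 0-based epoch.

    Epoch 0 trains only the classification head; each later epoch unfreezes
    `layers_per_epoch` more layers, starting from the top of the encoder.
    """
    n_unfrozen = min(num_layers, epoch * layers_per_epoch)
    return list(range(num_layers - n_unfrozen, num_layers))
```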

In the testing phase, the saved model outputs a probability for each label. Because the test data is unlabeled, we submitted the output probabilities to the competition's system, as required by its submission rules.

EXPERIMENTS

Experimental configuration

We conducted experiments on Google Colab Pro with a Tesla K80 GPU, 13 GB of RAM and an Intel Xeon CPU @ 2.2 GHz. We use the PyTorch

Evaluation Metrics

We use the Area Under the Receiver Operating Characteristic Curve (AUC-ROC) for evaluation. The ROC curve plots the true positive rate against the false positive rate as the classification threshold varies, and the AUC summarizes the degree of separability: it measures how well the model distinguishes the two classes, with 1.0 indicating perfect separation and 0.5 indicating random ranking.
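AUC equals the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one, with ties counted as one half. The pairwise sketch below computes it directly from that definition; it runs in quadratic time, so in practice a library routine such as scikit-learn's `roc_auc_score` would be used instead.

```python
def roc_auc(labels, scores):
    """AUC as P(score of a random positive > score of a random negative); ties count 1/2."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))
```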

EXPERIMENTAL RESULTS

Our experimental results, measured by AUC, are reported in

The performance of traditional machine learning algorithms on meta-data only

| Method | AUC |
|---|---|
| Logistic Regression | 0.6948 |
| Linear SVM | 0.7029 |
| LightGBM | 0.6292 |
| CatBoost | 0.5602 |
| Random Forest | 0.6014 |

The performance of models on original text and text combined with meta-data.

| Pretrained model | Learning rate | Epochs | AUC |
|---|---|---|---|
| ViBERT | 3e-05 | 6 | 0.9324 |
| vELECTRA | 1e-05 | 4 | 0.9390 |
| PhoBERT | 6e-05 | 6 | 0.9259 |
| ViBERT + meta-data | 3e-05 | 6 | 0.9380 |
| vELECTRA + meta-data | 1e-05 | 4 | 0.9575 |
| PhoBERT + meta-data | 6e-05 | 6 | 0.9519 |

As shown in

To better understand these results, we also conducted experiments combining the text with each meta-data feature individually to investigate which feature contributes most to the model's performance. Furthermore, because the meta-data values differ depending on the filling method (mean or median), we compared classification performance under each filling method. Many of these experimental results outperformed those of the top teams. As shown in

Experimental results of the best model (vELECTRA) when incorporating different features and filling methods.

| Feature | AUC (mean filling) | AUC (median filling) |
|---|---|---|
| Num like post | 0.9512 | 0.9448 |
| Num share post | 0.9496 | 0.9439 |
| Num comment post | 0.9492 | 0.9429 |
| Timestamp | 0.9422 | 0.9421 |
| All | 0.9575 | 0.9450 |

Therefore, we have achieved state-of-the-art results on this dataset. We have summarized all previous results in

Comparison of our best model with the results of other teams

| Team/Author | AUC | Model |
|---|---|---|
| Ours | 0.9575 | vELECTRA + meta-data |
| (Pham et al., 2021) | 0.9538 | PhoBERT + CNN + TF-IDF |
| Kurtosis (⋆) | 0.9521 | TF-IDF + SVD; PhoBERT embedding |
| NLP BK (⋆) | 0.9513 | Bert4news + PhoBERT + XLMR |
| SunBear (⋆) | 0.9462 | RoBERTa + MLP |
| uit kt (⋆) | 0.9452 | PhoBERT + BERT4news |
| Toyo-aime (⋆) | 0.9449 | CNN + BERT |
| Parabol (⋆) | 0.9397 | x |
| ZaloTeam (⋆) | 0.9378 | vELECTRA |
| UIT VLTN (⋆) | 0.8102 | x |

DISCUSSION

This dataset contains many missing values, which would reduce our model's predictive performance if they were not preprocessed carefully. During preprocessing, we removed the clear outliers, which made the data distribution less skewed; the mean is therefore a suitable value for imputing the missing data. Poulos & Valle (2018) likewise reported that mean imputation is an important and preferred choice for quantitative features in supervised learning21.

Following the experimental results in

CONCLUSION

In summary, we applied several methods and fine-tuning strategies to detect unreliable news: fine-tuning the ViBERT, PhoBERT and vELECTRA models on text combined with numeric features expressed in words. Our best result was obtained with vELECTRA, which achieved an AUC score of 0.9575 on this dataset. Our experiments show that, in addition to the text data, other features can be leveraged for classification because they may contain key information that helps the model predict more accurately.

Because judging the reliability of news is difficult even for humans, it can draw not only on the news content but also on related knowledge such as users' reactions. In the future, we therefore propose creating a more elaborate dataset that includes the sources of news, so that the model can strongly penalize sources and authors with a high proportion of fake news, while news from accredited sources is more likely to be considered trustworthy. We would also like the dataset to contain more images, since “a picture is worth a thousand words” and images are key pieces of evidence for verifying news. Finally, fake news exists not only in Vietnamese but also in other languages, so multilingual methods are worth considering. In future work, we plan to improve performance with multimodal and multilingual approaches.

Acknowledgement

None

Author contribution

Khoa Pham Dang and Dang Van Thin discussed and proposed the main ideas of this research. Khoa Pham Dang implemented the method, conducted the experiments and wrote the paper. Ngan Luu-Thuy Nguyen discussed the ideas and contributed to writing the paper. All authors approved the final version.

Conflict of interest

The authors declare that they have no competing interests.

Abbreviations

None